The Growing Importance of AI Ethics

As artificial intelligence becomes more deeply integrated into our daily lives, the ethical implications of how these systems are designed, trained, and deployed have moved from theoretical discussion to pressing practical concern. AI assistants now help us manage our homes, offer healthcare advice, inform financial decisions, and even serve as companions for the elderly and isolated. With this expanded role comes greater responsibility for ensuring these systems operate ethically.

The UK's approach to AI ethics has been evolving rapidly. The AI Council's AI Roadmap and the establishment of the Centre for Data Ethics and Innovation (CDEI) both signal the importance placed on responsible AI development. For companies creating AI assistants, these ethical considerations are not merely regulatory hurdles but essential aspects of building trustworthy and beneficial products.

Privacy and Data Protection

Perhaps the most immediately apparent ethical concern for AI assistants is privacy. These systems typically collect vast amounts of personal data to function effectively, from voice recordings and location information to usage patterns and personal preferences. While this data collection enables personalized experiences, it also creates significant privacy risks.

Under the UK's data protection framework, which includes the UK GDPR and the Data Protection Act 2018, AI developers must build in privacy by design (the UK GDPR's own term is "data protection by design and by default"). In practice, this means:

- Collecting only the minimum data necessary for the assistant to function (data minimization)

- Providing clear information about what data is collected and how it's used

- Securing data against unauthorized access

- Enabling users to access, correct, and delete their personal information

- Establishing appropriate retention periods for different types of data
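Two of the points above, data minimization and retention periods, can be made concrete with a small sketch. Everything named here is hypothetical: the field allow-list, the data types, and the retention periods are illustrative assumptions, not values taken from the UK GDPR or from any real product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical allow-list: the only fields this illustrative assistant needs.
ALLOWED_FIELDS = {"user_id", "utterance_text", "timestamp"}

# Hypothetical retention periods per data type. Real values are a policy
# decision and are not prescribed by this sketch.
RETENTION = {
    "voice_recording": timedelta(days=30),
    "usage_log": timedelta(days=365),
}


def minimize(record: dict) -> dict:
    """Drop every field that is not on the allow-list (data minimization)."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


@dataclass
class StoredItem:
    kind: str              # must be a key of RETENTION
    created_at: datetime


def is_expired(item: StoredItem, now: datetime) -> bool:
    """True once an item has outlived the retention period for its kind."""
    return now - item.created_at > RETENTION[item.kind]


def purge(items: list, now: datetime) -> list:
    """Return only the items that are still within their retention period."""
    return [item for item in items if not is_expired(item, now)]
```

Filtering against an allow-list, rather than deleting known-sensitive fields, means a newly added field is dropped by default until someone decides it is needed.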

Beyond legal requirements, ethical AI developers are exploring techniques such as federated learning, in which models are trained on the user's device and only model updates, not the raw data, are sent back to a central server. Another is differential privacy, which adds carefully calibrated random noise to data or query results so that no individual's contribution can be singled out, while aggregate statistics remain useful for analysis.
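To illustrate the differential privacy idea, here is a minimal sketch of the Laplace mechanism applied to a counting query. The article does not name a specific mechanism, so this choice is an assumption, as are the function names and the `epsilon` values a caller would pick.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample from Laplace(0, scale).

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the values matching `predicate`.

    A count has sensitivity 1 (adding or removing one person changes it
    by at most 1), so Laplace noise with scale 1/epsilon gives
    epsilon-differential privacy for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Usage: how many users are 65 or older? Smaller epsilon means more noise.
ages = [23, 35, 41, 67, 70, 82]
noisy = private_count(ages, lambda age: age >= 65, epsilon=0.5)
```

Each answered query spends part of a privacy budget, so a real deployment would also need to track cumulative `epsilon` across queries, which this sketch does not do.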

Privacy-by-design principles must be incorporated throughout the AI development lifecycle

Addressing Bias and Ensuring Fairness

AI systems, including assistants, learn from data that reflects historical and societal biases. Without careful attention, these systems can perpetuate or even amplify these biases. For example, an AI assistant trained primarily on data from certain demographic groups may provide less accurate or appropriate responses to users from underrepresented groups.

Ensuring fairness in AI assistants requires a multi-faceted approach:

- Using diverse and representative training data

- Testing systems across different user groups

- Implementing fairness metrics and regular bias audits

- Creating diverse development teams that can identify potential biases

- Establishing clear processes for addressing identified biases
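As one illustration of the fairness metrics and bias audits listed above, the sketch below compares accuracy and positive-prediction rate across user groups and reports the demographic parity gap. The function names and toy data are assumptions, and demographic parity is only one of several fairness definitions an audit might use.

```python
from collections import defaultdict


def per_group_rates(groups, y_true, y_pred):
    """Return {group: (accuracy, positive_rate)} for binary predictions."""
    stats = defaultdict(lambda: [0, 0, 0])  # [count, correct, predicted positive]
    for group, truth, pred in zip(groups, y_true, y_pred):
        entry = stats[group]
        entry[0] += 1
        entry[1] += int(truth == pred)
        entry[2] += int(pred == 1)
    return {g: (correct / n, pos / n) for g, (n, correct, pos) in stats.items()}


def demographic_parity_gap(rates) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    positive_rates = [positive_rate for _, positive_rate in rates.values()]
    return max(positive_rates) - min(positive_rates)


# Toy audit data: group label, true outcome, model prediction.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]

rates = per_group_rates(groups, y_true, y_pred)
gap = demographic_parity_gap(rates)
```

In this toy data both groups have the same accuracy, yet group "a" receives positive predictions far more often than group "b". That is why audits usually report several metrics side by side rather than accuracy alone.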

The UK's Centre for Data Ethics and Innovation has developed guidance on addressing algorithmic bias, emphasizing the importance of considering fairness throughout the AI development lifecycle rather than as an afterthought.

"The most significant ethical challenges in AI development don't come from malicious intent but from insufficient foresight about how systems will perform across diverse populations and circumstances."

— Professor Amara Wiseman, Oxford Institute for Ethics in AI

Transparency and Explainability

As AI assistants take on more complex tasks and make or recommend important decisions, users need to understand how these systems work and why they make specific recommendations. This transparency is particularly crucial when AI assistants are used in sensitive domains like healthcare, finance, or education.

The challenge is that advanced AI systems, particularly those based on deep learning, often function as "black boxes" where even their developers may not fully understand how they arrive at specific outputs. This tension between capability and explainability represents one of the central ethical dilemmas in modern AI development.

Approaches to addressing this challenge include:

- Developing interpretable AI models that balance performance with explainability

- Creating "explainable AI" (XAI) techniques that can provide post-hoc explanations for complex model outputs

- Setting appropriate levels of transparency based on the context and stakes of AI use

- Providing clear information to users about the capabilities and limitations of AI assistants
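To make the idea of a post-hoc explanation concrete, here is a minimal sketch of permutation importance, one model-agnostic XAI technique. The article does not single out any particular method, so this choice is an assumption, and the toy model and data are hypothetical. The idea is to shuffle one input feature at a time and measure how much accuracy drops; a large drop suggests the model relies on that feature.

```python
import random


def accuracy(model, rows, labels) -> float:
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Mean accuracy drop per feature when that feature's column is shuffled.

    `model` is any callable mapping a feature tuple to a predicted label.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [row[:j] + (value,) + row[j + 1:]
                        for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


# Hypothetical model that looks only at feature 0 and ignores feature 1.
def toy_model(row):
    return int(row[0] > 0.5)


rows = [(0.1, 5), (0.9, 3), (0.2, 8), (0.8, 1), (0.3, 7), (0.7, 2)]
labels = [0, 1, 0, 1, 0, 1]
scores = permutation_importance(toy_model, rows, labels, n_features=2)
```

Here the score for feature 1 is zero because the toy model never reads it. Explanations of this kind describe what the model depends on, not whether that dependence is justified.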

Autonomy and Human Oversight

As AI assistants become more capable, questions arise about the appropriate balance between automation and human control. While automation can improve efficiency and consistency, preserving human autonomy and oversight remains essential, especially for consequential decisions.

The European approach to AI regulation, which has influenced UK thinking, emphasizes "human-centered AI" with appropriate human oversight. This principle recognizes that while AI can advise and assist, humans should retain meaningful control over important decisions affecting their lives.

For developers of AI assistants, this means carefully considering:

- Which tasks should be fully automated versus which require human review

- How to design interfaces that clearly communicate when users are interacting with AI

- How to enable users to override AI recommendations when appropriate

- How to ensure AI assistants support rather than undermine human agency
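The first and third questions above can be sketched as a simple routing rule: high-stakes or low-confidence recommendations go to a human, and an explicit user choice always wins. The class names, the `high_stakes` flag, and the 0.9 threshold are all hypothetical; where to draw these lines is a product and policy decision.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    AUTOMATE = "automate"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Recommendation:
    action: str
    confidence: float   # model's self-reported confidence in [0, 1]
    high_stakes: bool   # e.g. a medical or financial decision


# Hypothetical threshold below which a human must review the recommendation.
CONFIDENCE_THRESHOLD = 0.9


def route(rec: Recommendation) -> Route:
    """High-stakes or low-confidence recommendations always go to a human."""
    if rec.high_stakes or rec.confidence < CONFIDENCE_THRESHOLD:
        return Route.HUMAN_REVIEW
    return Route.AUTOMATE


def final_action(rec: Recommendation, user_override: Optional[str] = None) -> str:
    """The user's explicit choice, if any, takes precedence over the AI's."""
    return user_override if user_override is not None else rec.action


# Usage: a routine task is automated; a high-stakes one is escalated.
lights = Recommendation("dim_lights", confidence=0.97, high_stakes=False)
loan = Recommendation("approve_loan", confidence=0.99, high_stakes=True)
```

Checking the stakes before the confidence score reflects the point made above: for consequential decisions, a confident model is not a substitute for human review.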

Safety and Reliability

As users increasingly rely on AI assistants for important tasks, the safety and reliability of these systems become critical ethical considerations. An unreliable AI assistant might provide inaccurate medical information, recommend unsafe products, or fail to properly secure a smart home.

Developing safe and reliable AI assistants requires:

- Rigorous testing across diverse scenarios

- Robust quality assurance processes

- Clear communication about system limitations

- Regular updates to address emerging risks

- Mechanisms for users to report safety concerns

The UK's AI Safety Institute, established in 2023, highlights the growing emphasis on ensuring AI systems operate safely and as intended, particularly as they become more capable and autonomous.

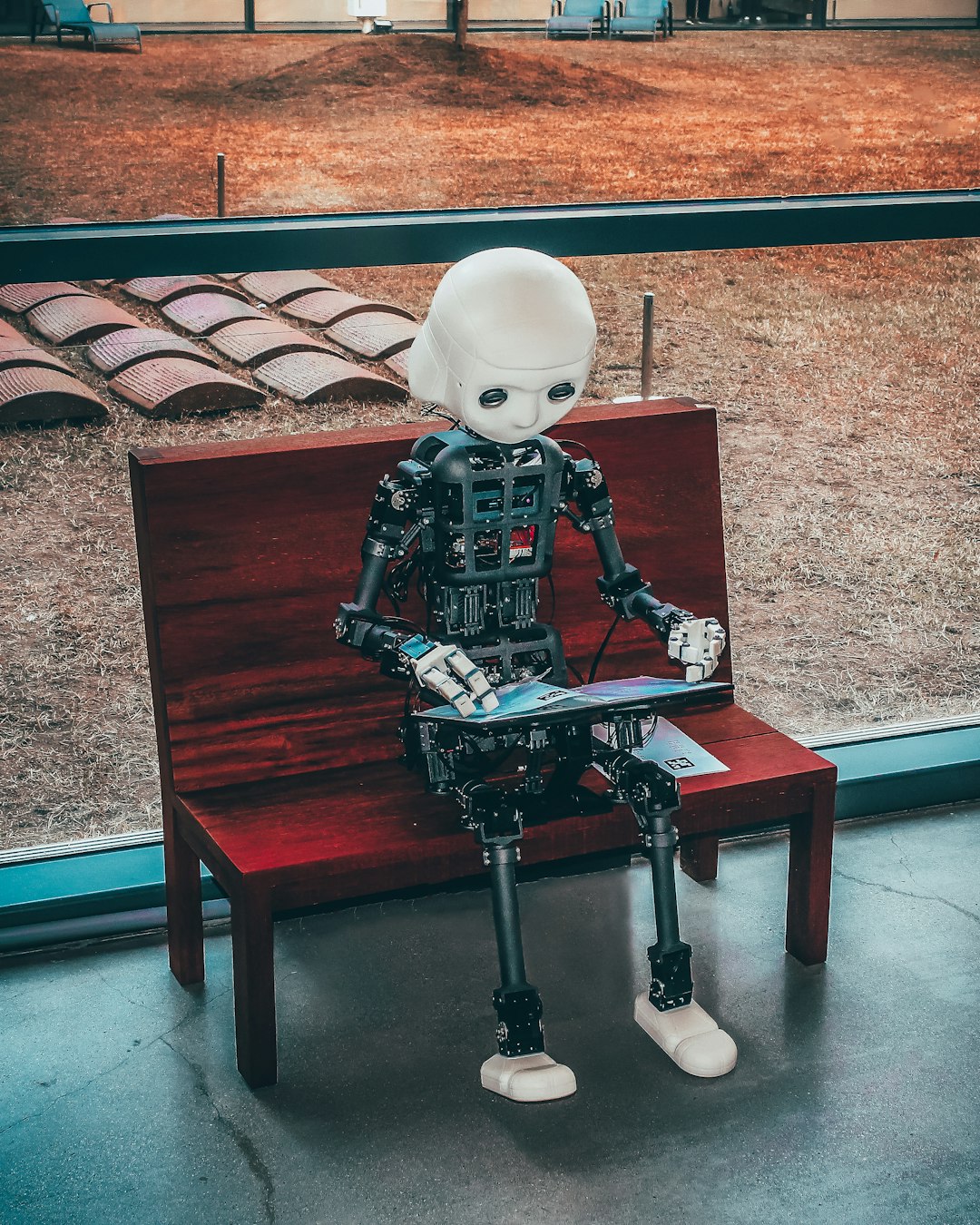

Rigorous testing of AI systems is essential to ensure safety and reliability

Psychological and Social Impacts

Beyond technical considerations, developers must also consider the psychological and social impacts of AI assistants. These systems can shape how people interact with technology and potentially with each other. Key considerations include:

- How AI assistants with human-like characteristics might affect user expectations and relationships

- The potential impacts of AI companions on vulnerable populations, including children and isolated elderly individuals

- How to design AI assistants that encourage healthy technology use rather than dependence

- The broader societal implications of increasingly capable AI systems

Research in this area is still emerging, but responsible AI developers are beginning to incorporate these considerations into their design processes, often working with psychologists, sociologists, and ethicists to better understand the potential impacts of their products.

Building Ethical AI Frameworks

Addressing these ethical challenges requires more than ad hoc solutions; it demands systematic approaches to ethical AI development. Many organizations are now establishing AI ethics frameworks that include:

- Clear ethical principles and values that guide AI development

- Processes for ethical risk assessment throughout the development lifecycle

- Diverse ethics committees or review boards to evaluate sensitive applications

- Regular ethics training for AI developers and product managers

- Mechanisms for stakeholder engagement, including users and affected communities

These frameworks help organizations move beyond viewing ethics as a compliance exercise and instead integrate ethical considerations into their core development practices.

Conclusion: Ethics as Innovation

Far from being a constraint on innovation, ethical considerations can drive the development of better AI assistants that users can truly trust and rely upon. As AI capabilities continue to advance, the companies that succeed will be those that view ethics not as an afterthought but as a fundamental aspect of product quality and user experience.

The path forward involves collaboration among developers, researchers, policymakers, and users to establish and uphold ethical standards that enable AI assistants to fulfill their potential while respecting human values and rights. By addressing these ethical challenges thoughtfully, we can ensure that AI assistants genuinely assist us in building the kind of society we want to live in.

Comments (8)

Robert Taylor

May 11, 2024

This article raises some crucial points about AI ethics. As someone working in healthcare technology, I've seen firsthand how important transparency is when implementing AI systems. Patients and clinicians need to understand how recommendations are being generated, especially for critical decisions.

Aisha Patel

May 10, 2024

I appreciate the discussion on bias in AI systems. Too often these issues are treated as purely technical problems when they're actually deeply social and political. We need more diverse teams developing these systems and more engagement with affected communities throughout the process.